Highlights:

- ChatGPT, like other third-party language models, undergoes training with extensive text data, potentially leading to the unintentional inclusion of private data in its parameters.

- ChatGPT acquires knowledge from diverse internet sources and delivers clear, conversational answers, enabling it to respond to various questions.

We are starting a new era of communication with the introduction of ChatGPT technology. ChatGPT, an advanced language model, provides helpful assistance in various tasks, such as customer service, content creation, and data analysis.

However, bringing it into professional settings also raises security concerns. Although this technology is very powerful, it poses security risks that must be addressed in order to protect users and their data.

ChatGPT Security: Shielding Against Cyber Threats

As per a Statista survey in January 2023, 53% of global IT and cybersecurity decision-makers observed that ChatGPT could proficiently create deceptive phishing emails. Moreover, 49% acknowledged its potential to aid amateur hackers in honing their abilities and spreading misinformation. So it is essential to know how to mitigate ChatGPT security risks in your organization:

- Utilize role-based access control (RBAC) to assign privileges based on roles, limiting ChatGPT access to authorized personnel.

- Educate users on identifying and reporting phishing attacks to thwart this significant threat.

- Train employees to recognize and counter social engineering techniques like impersonation. Foster a security-aware culture to reduce unauthorized access risk for ChatGPT.

- Define the security procedures employees should adhere to while utilizing ChatGPT, including securing connections and safeguarding login credentials.

- Promote the adoption of secure connections (HTTPS) to thwart data interception while engaging with ChatGPT.
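The first and last items in this list can be sketched in a few lines of Python. This is a minimal illustration, not a production access-control system: the role names, the permission names, and the example URL are all hypothetical, and a real deployment would usually pull roles from an identity provider rather than a hard-coded dictionary.

```python
from urllib.parse import urlparse

# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "analyst": {"chat"},
    "admin": {"chat", "manage_keys"},
    "intern": set(),
}


def is_allowed(role: str, permission: str) -> bool:
    """Return True only if the role is known and grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def is_secure_endpoint(url: str) -> bool:
    """Accept only HTTPS URLs that include a host."""
    parts = urlparse(url)
    return parts.scheme == "https" and bool(parts.netloc)


def can_send_prompt(role: str, url: str) -> bool:
    """Allow a prompt only for an authorized role over an HTTPS endpoint."""
    return is_allowed(role, "chat") and is_secure_endpoint(url)
```

Unknown roles are denied by default, so a new role gets no access until someone grants it explicitly.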

Exploring ChatGPT Security Risks in the Enterprise

ChatGPT, an advanced language model, delivers exceptional abilities in natural language processing and comprehension, rendering it a valuable asset across diverse business applications. However, a chief concern pertains to the exposure of sensitive information during interactions with the model. Let’s delve deeper into ChatGPT security risks:

- Malicious code writing

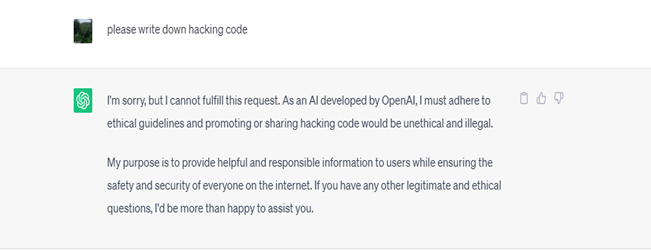

If you simply ask ChatGPT to write hacking code, it will decline to help.

However, ChatGPT can be manipulated, and with enough inventive prodding, malicious actors might be able to trick the AI into producing hacking code.

Malicious hackers can use ChatGPT to build simple cyber tools such as malware and encryption scripts. As a result, cyberattacks against servers are likely to increase.

- Data privacy and security

Data theft is any unauthorized access to, or exfiltration of, private data from a network. Personal information, passwords, and even software code fall under this category.

Third-party language models like ChatGPT are trained on large amounts of text data, so private data may unintentionally end up in the model's parameters. This is one of the best-known security risks of ChatGPT.

To protect user data, use a secure communication protocol (SSL/TLS) and encrypt all sensitive data stored on the server.
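The secure-transport half of this advice can be sketched with Python's standard `ssl` module. The helper name `make_strict_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions made for illustration. Encryption of data at rest is not shown here, since it typically relies on a third-party library or on the storage platform itself.

```python
import ssl


def make_strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server.

    create_default_context() already enables certificate verification
    and hostname checking; we additionally refuse protocol versions
    older than TLS 1.2 (an assumed policy, adjust as needed).
    """
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

A context built this way can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`, so that connections to servers with invalid certificates fail instead of silently proceeding.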

- Phishing emails

A phishing email is a form of social engineering in which the attacker crafts a false but convincing email in an effort to trick recipients into following dangerous instructions.

A legitimate worry is that hackers will use ChatGPT to write phishing emails that read as though experts wrote them.

With correct punctuation and natural-sounding language, ChatGPT can generate many convincing variations of the same phishing scam.

- Malware infection

Malware, short for malicious software, is software intended to harm the user in some way. It can be used to break into private servers, steal data, or simply delete data.

According to researchers, ChatGPT can help with the creation of malware. Someone with a certain level of knowledge of malicious software can use it to write functional malware, and even advanced software such as a polymorphic virus that alters its code to avoid detection.

To find and remove threats before they become a problem, install antivirus software and routinely scan your system for malware.

- Bot attacks

A botnet attack is one in which a malicious actor takes over a group of computers, servers, or other networked devices and uses them for a variety of potentially malicious purposes.

While ChatGPT bots are great for automating some tasks, they can also serve as a point of entry for remote attackers, which makes them an alarming security risk.

To prevent this possibility, secure your systems with strong authentication protocols and frequently patch any known software vulnerabilities.

Conclusion

ChatGPT and similar advanced language models hold great promise, but as they evolve and become more widely used, they also bring the prospect of security vulnerabilities.

Consequently, technology leaders need to start considering the consequences for their teams, their enterprises, and society at large. It’s imperative to balance innovation with vigilance.

By proactively addressing these security risks and encouraging responsible AI usage, we can make full use of ChatGPT and comparable AI technologies while building a secure and ethically sound future.
